Let's encrypt everything!

https is easy, simple, free, and soon to be "mandatory". All of my sites and services use https, always. This was done as a project over time quite a few years ago, combined with converting all of my sites and services to run on docker which I touched on in my previous post, and will be covering in the future.

Originally, everything ran on a couple of paid, catch-all wildcard certificates from startssl. After let's encrypt launched, I decided to move everything over to them. Not really to save money, but rather because I had a feeling that let's encrypt would be huge, and something that I really should learn more about as soon as possible. And boy was I glad I did when the whole startcom drama occurred.

In this post I will describe the technology I used to create a zero maintenance let's encrypt based system for all of my sites and services, including automatic http->https redirects, that is future proof (meaning that adding new services or sites requires zero effort regarding https, as long as they run in docker). But first a few thoughts on https in general.

Why https is important, and something you need to know

Back in the day, https was something you needed if you were an online bank, doing e-commerce, running a secure corporate network, or something else "important". Some years ago, this started to change. It started as a trickle, but recently the faucet has been opened, and now everybody online needs to relate to it. If you publish stuff online or run any kind of online service, there is no way around it. If you are "only a consumer" you still need to relate to it, and if you don't, you will be in trouble at some point. Hint: Do you always ensure any site you enter any personal information on is encrypted?

The main deal here is that you need to ensure that all of your personal information is secure online, including your browsing habits. These days, anything you do online without https (or when talking about non-web stuff, without encryption) is up for grabs by anybody between you and whatever end point you are accessing. This isn't anything new, but awareness of it is much greater, and it's no longer only the "nerds in the closets" who have access. It could be anybody. And if you are on a non-private wireless network (which is more and more common, wifi is everywhere), you can almost guarantee that the baddies have all of your unencrypted information.

In other words, doing anything unencrypted online today is a bad idea, period. But wait, you might say, I don't have anything to hide. Hint: Everybody has something to hide, you might just not think about it all the time. Would you post your VISA number and PIN-code online? Would you post your social security number online? Would you post all of your web history online? Even if you somehow exist in a parallel reality where you would post all of these things online, by not using encryption you are harming those whose lives depend on encryption being available. There are lots of people out there whose lives depend on being able to use encryption freely, such as dissidents in oppressive states and dictatorships, whistleblowers, and people fleeing from persecution, abusive relationships or even the mafia. If you use encryption when you strictly don't feel the need to, you help create plausible deniability for those who do need it, and you help ensure encryption options exist. If you don't, you indirectly harm them. Of course, this is an even broader area than just https. Encrypted email, chat, voice, etc. is just as important.

By always using encryption you are also protecting yourself from spoofs, traps and tricks. There are lots of ways to attempt to fool you into believing you are visiting a different site than you actually are. The reasons can be many, including stealing your information, tricking you into clicking on ads to generate revenue for somebody, or installing viruses or malware on your computer. By always using https, some of these tricks are foiled, though of course not all of them.

Finally, because of all of the issues mentioned above, browser vendors are starting to enforce the use of https. This includes showing a big red warning to all users that your site is not safe if you don't use https, making new web functionality available only over https (no https, no new web features on your site), and downright blocking you completely. The vendors started slowly, but are getting more and more aggressive, and we are most likely not far from a situation where https basically is mandatory for all presence on the web. The unencrypted web is soon to be broken. If you haven't already, now is the time to prepare.

Pros and cons?

There are two options. Embrace the change and be happy, or complain and make a fuss. But in the end, complaining will get you nowhere. This is happening, it's happening now, and it's happening fast. It (should) impact everybody, and nobody can change it. So embrace it instead. Hence I'm not going to say much about pros and cons. But one of each is worth mentioning.

The main pro is of course that this is needed. And it has been needed for a long time. So that this is finally happening is great.

The main con has to do with access to technology. Web publishing of simple content is easy, very easy. All you need is a server, or a computer that is always on, and a public IP-address or a forwarding service. In addition you need to acquire some simple knowledge of HTML and how to install a web server. That's it. But once https is de facto required for everything, since your published content will otherwise be banned by the browsers, much more technical knowledge is needed, and it is no longer a trivial task to learn. This is a shame since it hinders access to knowledge, and can kill the joy of learning by making the initial threshold higher.

Let's encrypt

To make the mentioned shift possible, the Internet Security Research Group (ISRG), backed by Mozilla among others, started working on a free, easy to use system called Let's encrypt. Today, a lot of major players sponsor Let's encrypt. Https used to be expensive, and fairly unavailable. Let's encrypt's goal was to change this.

Let's encrypt is free to use, and you can create as many certificates as you want for free. You can do it manually, but the certificates are only valid for three months at a time. Fortunately Let's encrypt provides a very good API that allows easy automation of certificate creation and renewal. Furthermore, basically the whole world of service providers and application developers has embraced Let's encrypt. Most applications or services either provide a plug-in or already have Let's encrypt built in. You'll also find multiple API-implementations for basically any programming language out there, even lisp.

Let's encrypt makes basic https free and available for everybody, which is great. Advanced use, the kind of use required by banks, e-commerce, etc. isn't covered, but that's ok. This service is for the broad public, not for the huge corporations.

Technical awesomeness

I promised you some technical goodies, and here they are. As mentioned in my previous post, I have converted all of my sites and services to run on docker over the last few years. I'm more or less in love with docker, and basically have my whole life in docker these days. As part of this process I converted everything to use https. Initially I used a master certificate I paid for, but as soon as Let's encrypt was mature, I decided to switch, a choice I'm very happy with. Fortunately, I decided to try to make a system that was automatic, and future proof. In other words, no maintenance needed, and adding new sites or services should be trivial.

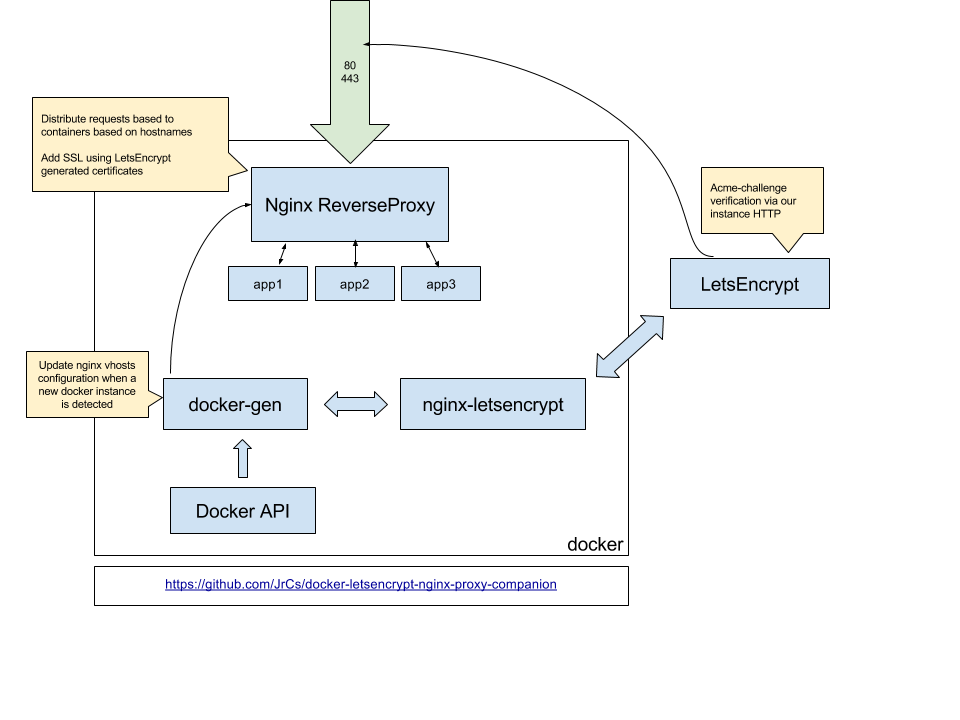

While converting all of my sites and services to docker, I also set up https for all of them. This was done using the fabulous docker image jwilder/nginx-proxy. It runs as a single nginx container instance, and listens to docker, waiting for new containers to be started. By adding a few environment variables when spinning up a new container, you can tell nginx-proxy that you want the container to be proxied under a given hostname. Nginx-proxy automatically detects this, creates a proxy configuration, and reloads itself. Hence you can add new services to your proxy by simply adding a few environment variables to the command when starting the service. No need for any manual configuration or restarting of containers. Nginx-proxy handles everything.
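To make the idea concrete, here is a minimal Python sketch of what such a proxy does conceptually. It is not how nginx-proxy is implemented. The `Container` class, the `render_proxy_config` function, the addresses and the hostnames are all made up for illustration, and `VIRTUAL_HOST` is, as far as I know, the variable name the image documents for the hostname.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    """Hypothetical stand-in for a running docker container."""
    name: str
    ip: str
    port: int
    env: dict = field(default_factory=dict)


def render_proxy_config(containers):
    """Return one nginx server block per container that sets VIRTUAL_HOST."""
    blocks = []
    for c in containers:
        host = c.env.get("VIRTUAL_HOST")
        if not host:
            continue  # containers without the variable are not proxied
        blocks.append(
            f"server {{\n"
            f"    server_name {host};\n"
            f"    location / {{ proxy_pass http://{c.ip}:{c.port}; }}\n"
            f"}}"
        )
    return "\n\n".join(blocks)


containers = [
    Container("blog", "172.17.0.2", 8080, {"VIRTUAL_HOST": "blog.example.com"}),
    Container("database", "172.17.0.3", 5432),  # no VIRTUAL_HOST, stays private
]
config = render_proxy_config(containers)
print(config)
```

Only the container that opts in through its environment ends up in the generated configuration, which is the whole point: the service describes itself, and the proxy does the rest.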

Nginx-proxy has a single configuration copied and used for all existing and new proxied containers. In addition, you can specify individual configurations for individual containers (based on hostname) if needed, but naturally this process does require a reboot. If you already have existing purchased https certificates, you can add them to nginx-proxy, again identified by hostname. This is how I originally did things. Finally, the proxy will, when you use https, ensure that any insecure requests are automatically redirected to secure requests. This can be disabled if you want to, though I cannot fathom why you would. Not only is this important to ensure the security of your users, but Google will index your secure and non-secure sites as separate sites, so serving content (the same or different) on both can cause issues with your search rankings.
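The redirect rule itself is tiny. As a rough Python sketch (the function name and the `redirect_enabled` switch are hypothetical, and a permanent 301 redirect is an assumption on my part, not something taken from nginx-proxy's configuration):

```python
def redirect_insecure(scheme, host, path, redirect_enabled=True):
    """Return (status, location) for a request that should be redirected,
    or None if the request should be served as it is."""
    if scheme == "http" and redirect_enabled:
        # Same host and path, only the scheme changes.
        return 301, f"https://{host}{path}"
    return None


print(redirect_insecure("http", "blog.example.com", "/post?id=1"))
print(redirect_insecure("https", "blog.example.com", "/post?id=1"))
```

Keeping the host and path intact means old http links and bookmarks keep working, they just end up on the secure version of the same page.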

I plan to give a much more detailed description of the setup I've used for docker in a later post.

Adding Let's encrypt to the mix

Since I already had this system when I wanted to convert to Let's encrypt, I decided to investigate if I could find something that worked well with the system I already had. And fortunately I did.

The component I found was JrCs/docker-letsencrypt-nginx-proxy-companion. This nice little component runs as its own container and hooks into the system described above. It allows using Let's encrypt as a part of the system, again by adding a few environment variables when creating a container, in the same way as with nginx-proxy. These environment variables instruct the companion to retrieve a certificate from Let's encrypt if a valid certificate doesn't already exist. The companion then handles the certificate generation, and instructs the proxy to use that certificate for the new container it has been instructed to proxy.
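The decision the companion makes can be sketched in a few lines of Python. This is a simplification and not the companion's actual code: the `cert_expiry` store and the function name are hypothetical, each hostname is treated separately, and `LETSENCRYPT_HOST` and `LETSENCRYPT_EMAIL` are, as far as I know, the variable names the companion documents.

```python
from datetime import datetime, timezone


def hosts_needing_certificate(env, cert_expiry, now):
    """Return hostnames from LETSENCRYPT_HOST that lack a valid certificate.

    env         -- the container's environment variables
    cert_expiry -- hypothetical store: hostname -> expiry time of its certificate
    now         -- the current time
    """
    raw = env.get("LETSENCRYPT_HOST", "")
    hosts = [h.strip() for h in raw.split(",") if h.strip()]
    return [h for h in hosts if cert_expiry.get(h) is None or cert_expiry[h] <= now]


now = datetime(2024, 1, 1, tzinfo=timezone.utc)
store = {"blog.example.com": datetime(2024, 3, 1, tzinfo=timezone.utc)}
env = {
    "VIRTUAL_HOST": "blog.example.com,wiki.example.com",
    "LETSENCRYPT_HOST": "blog.example.com,wiki.example.com",
    "LETSENCRYPT_EMAIL": "admin@example.com",
}
missing = hosts_needing_certificate(env, store, now)
print(missing)  # ['wiki.example.com']
```

A container that doesn't set the variable at all yields an empty list, so nothing is requested for it.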

This makes adding certificates to the system easy as can be. All you need to do is add a couple more environment variables to the command you use to create your instance, and Bob's your uncle.

The companion also ensures the certificates stay up to date (remember, they are only valid for three months) without any manual interaction. In addition to the daemon listening for new containers, the companion runs a "cron job" that periodically checks the validity of the certificates in use. By default, any certificate that will expire in less than a month is automatically renewed.
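The periodic check boils down to comparing each expiry date against a renewal window. A minimal Python sketch, assuming "less than a month" means 30 days (the function name, the hostnames and the dates are made up for illustration):

```python
from datetime import datetime, timedelta, timezone

RENEW_BEFORE = timedelta(days=30)  # "less than a month", assumed to be 30 days


def due_for_renewal(cert_expiry, now, renew_before=RENEW_BEFORE):
    """Return the hostnames whose certificate expires within the renewal window."""
    return sorted(h for h, expires in cert_expiry.items() if expires - now < renew_before)


now = datetime(2024, 1, 1, tzinfo=timezone.utc)
certs = {
    "blog.example.com": now + timedelta(days=75),  # freshly issued, left alone
    "wiki.example.com": now + timedelta(days=12),  # inside the window
    "old.example.com": now - timedelta(days=3),    # already expired
}
renew = due_for_renewal(certs, now)
print(renew)  # ['old.example.com', 'wiki.example.com']
```

With certificates that live for three months and a renewal window of one month, a failed renewal has weeks of retries ahead of it before anything actually expires.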

Shortcomings of the setup

The only shortcoming I've found with the system is an issue that most likely not many people will encounter, and it only applies to the Let's encrypt companion. Basically, the companion doesn't handle multiple instances very well. If you run multiple instances of it on the same system, they will all trigger on all actions, and try to create or renew certificates that they themselves don't "own", resulting in error messages and unneeded communication with the Let's encrypt API. The system is still fully usable, but the added error messages in the log and the added traffic to Let's encrypt are annoying.

And in case you wonder why I would have two instances, the reason is I have individual nginx proxies listening to two different IP-addresses on the same machine. I initially had to do this to allow incoming webhooks from a system that couldn't handle wildcard certificates. This prompted me to set up a different IP-address on the same system, not only for webhooks from the mentioned system, but all incoming webhooks. Feels nice to be able to keep incoming webhooks separate from the rest of the system.

Should I do the same?

You might be planning on doing something similar yourself, and wondering if you should create a setup similar to the one I created. The answer to this is: probably not.

I learned a great deal and had a lot of fun while implementing the system, and I'm very happy with the result. I now have a stable, reliable and extendable system that requires little to no maintenance. However, getting there was not trivial. It required a lot of trial and error, reading documentation, reading code when documentation wasn't available (more often than not), and a couple of restarts from scratch.

Unless you actually want to do all of this tinkering (for fun, or for profit) or you want to learn more about docker, let's encrypt and nginx, you probably shouldn't go down the same route I did.

If you simply want something robust that works, you are probably better off going with a more "out of the box" system, such as Træfik. Granted, learning Træfik is probably just as much work as setting up the system I described above, but you'll get a whole lot more for free (such as a nice UI, clustering support, metrics, an API, etc.). You will also be learning something you are much more likely to encounter in "the real world". Documentation is also a lot better, and I'm guessing that the technology most likely will exist longer into the future as well.

At some point I want to convert my system to use Træfik, and potentially also Kubernetes, but that is a task and a blog post for another day, and not something I highly prioritize at the moment.

If you only take one thing away from this blog post, I hope it's that you have to be conscious of https and encryption and actively relate to it, no matter who you are.